These are the subtle types of errors that are much more likely to cause problems than when it tells someone to put glue in their pizza.

Obviously you need hot glue for pizza, not the regular stuff.

It do be keepin the cheese from slidin off onto yo lap tho

You’re giving humans too much credit for intelligence… there are people who literally drove into lakes because a GPS told them to…

Yes, it’s totally ok to reuse fish tank tubing for grammy’s oxygen mask

Wait… why can’t we put glue on pizza anymore?

because the damn liberals canceled glue on pizza!

How else are the toppings supposed to stay in place?

And this technology is what our executive overlords want to replace human workers with, just so they can raise their own compensation and pay the remaining workers even less

So much this. The whole point is to annihilate entire sectors of decent paying jobs. That’s why “AI” is garnering all this investment. Exactly like Theranos. Doesn’t matter if their product worked, or made any goddamned sense at all really. Just the very idea of nuking shitloads of salaries is enough to get the investor class to dump billions on the slightest chance of success.

Exactly like Theranos

Is it though? This one is an idea that can literally destroy the economic system. Seems different to ignore that detail.

Current gen AI can’t come close to destroying the economy. It’s the most overhyped technology I’ve ever seen in my life.

You’re missing the point. They aim to replace most/all jobs. For that to be possible, it will need investment, and to get a lot better. If that happens, a worldwide inability to make a living will happen. It likely will have negative impact even on the rich bastards.

There’s an upper ceiling on capability though, and we’re pretty close to it with LLMs. True artificial intelligence would change the world drastically, but LLMs aren’t the path to it.

Yeah, I never said this is going to happen. All I was commenting on is how it’s ironic that the people investing in destroying jobs are too myopic to realize that would be bad for them too.

They always miss this part. It’s (part of) why the Republicans wanting to be Russian-style oligarchs is so insane. And ignoring good faith government and their disregard for the rule of law.

Do they KNOW what happens to Russian oligarchs? Why do they think they’re immune to that part of it? Do they really want the cutthroat politics of places like Russia and Africa, where they constantly have to watch their backs?

These people already have money. Their aims, if achieved, will not make their lives better.

Many years ago the people who ruled this country figured out that the best thing for them was to spread power and have most civilians in good health. Government by committee and good faith government is less about ethical treatment of citizens (though I appreciate the side effect) and more about protecting the committee and/or the would be dictator.

Ah, I misunderstood then, sorry. But still, even with all the investment in the world, LLM is a bubble waiting to burst. I have a hunch we will see truly world-altering technology in the next ~20 years (the kind that’d put huge swathes of people out of work, as you describe), but this ain’t it.

This is the kind of shit that makes Idiocracy the most weirdly prophetic movie I’ve ever seen.

Ignoring the blatant eugenics of the very first scene, I’d rather live in the Idiocracy world, because at least the president, with all of his machismo and grandstanding, was still humble enough to put the smartest guy in the room in charge of actually getting plants to grow.

My take away from that was the poorly educated had more kids.

Giving the benefit of the doubt, I can see that reading, but it definitely implied that stupidity is genetic because of how big the stupid people’s family tree gets, and the sci-fi story it was based on was a looooot more explicit with the eugenics.

yeah, I honestly am expecting to die in a camp at this point.

This, combined with the meteoric rise of fascism, absolutely leaves me thinking that I’ll probably end up in a concentration camp

Hope for the best but prepare for the worst!

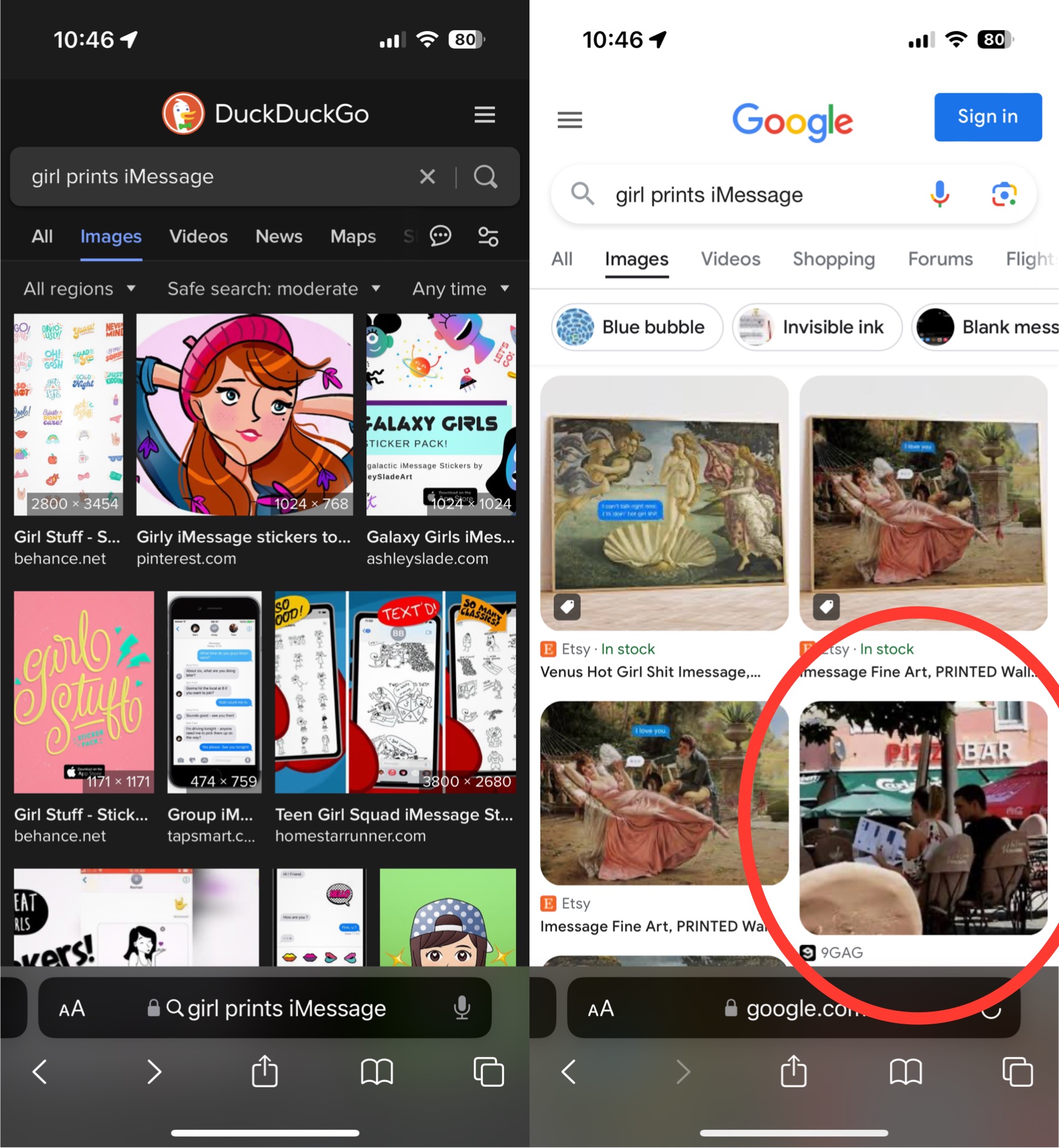

I am starting to think Google put this up on purpose to destroy people’s opinion of AI. They are so far behind OpenAI that they would benefit from it.

I doubt there’s any sort of 4D chess going on, instead of the whole thing being brought about by short-sighted executives who feel like they have to do something to show that they’re still in the game exactly because they’re so much behind "Open"AI

It could happen without any 4D chess thinking: they try, they realize that they failed, but they also realize that they win here either way.

This shit is so bad that even a blind guy can see it.

This shit is so bad that even a blind guy can see it.

You severely underestimate the shortsightedness of the executive class. They’re usually so convinced of their infallibility that they absolutely will make decisions that are obviously terrible to anyone looking in from the outside

Conspiratorial thinking at its finest.

Yes, because this whole thing is so incredibly stupid that how could they not see it? The saying, of course, is “never attribute to malice what can be attributed to incompetence”, but holy shit, how incompetent this 2 trillion dollar company was.

It blows my mind that these companies think AI is good as an informative resource. The whole point of generative text AIs is to make things up based on their training data. It doesn’t learn, it generates. It’s all made up, yet they want to slap it on a search engine like it provides factual information.

Yeah, I use ChatGPT fairly regularly for work. For a reminder of the syntax of a method I used a while ago, and for things like converting JSON into a class (which is trivial to do, but using chatGPT for this saves me a lot of typing) it works pretty good.

But I’m not using it for precise and authoritative information, I’m going to a search engine to find a trustworthy site for that.

Putting the fuzzy, usually close enough (but sometimes not!) answers at the top when I’m looking for a site that’ll give me a concrete answer is just mixing two different use cases for no good reason. If google wants to get into the AI game they should have a separate page from the search page for that.
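For anyone wondering what the "JSON into a class" chore above looks like, here is a minimal sketch in Python. The `Order` class, its fields, and the sample JSON are all made up for illustration; the point is only that the work is mechanical typing, which is why handing it to a chatbot and then checking the result is low risk.

```python
import json
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical fields, chosen only for this example.
    id: int
    customer: str
    total: float


def order_from_json(raw):
    """Parse a JSON object string and map its keys onto an Order."""
    data = json.loads(raw)
    return Order(
        id=int(data["id"]),
        customer=str(data["customer"]),
        total=float(data["total"]),
    )


order = order_from_json('{"id": 7, "customer": "Ada", "total": 19.99}')
print(order)
```

A missing key raises `KeyError` here, which is the kind of detail you still have to check yourself whoever typed the class.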

Yeah it’s damn good for translating between languages, or things that are simple in concept but drawn out in execution.

Used it the other day to translate a complex EF method syntax statement into query syntax. It got it mostly right, did need some tweaking, but it saved me about 10 minutes of humming and hawing to make sure I did it correctly (honestly I don’t use query syntax often.)

They give zero fucks about their customers, they just want to pump that stock price so their RSUs vest.

This stuff could give you incurable highly viral brain cancer that would eliminate the human race and they’d spend millions killing the evidence.

True, and it’s excellent at generating basic lists of things. But you need a human to actually direct it.

Having Google just generate whatever text is like just mashing the keys on a typewriter. You have tons of perfectly formed letters that mean nothing. They make no sense because a human isn’t guiding them.

It’s like the difference between being given a grocery list from your mum and trying to remember what your mum usually sends you to the store for.

… Or calling your aunt and having her yell things at you that she thinks might be on your Mum’s shopping list.

That could at least be somewhat useful… It’s more like grabbing some random stranger and asking what their aunt thinks might be on your mum’s shopping list.

… but only one word at a time. So you end up with:

- Bread

- Cheese

- Cow eggs

- Chicken milk

I mean, it does learn, it just lacks reasoning, common sense or rationality.

What it learns is what words should come next, with a very complex and nuanced way of deciding that can very plausibly mimic the things that it lacks, since the best sequence of next-words is very often coincidentally reasoned, rational or demonstrating common sense. Sometimes it’s just lies that fit with the form of a good answer though.

I’ve seen some people work on using it the right way, and it actually makes sense. It’s good at understanding what people are saying, and what type of response would fit best. So you let it decide that, and give it the ability to direct people to the information they’re looking for, without actually trying to reason about anything. It doesn’t know what your monthly sales average is, but it does know that a chart of data from the sales system filtered to your user, specific product and time range is a good response in this situation.

The only issue for Google insisting on jamming it into the search results is that their entire product was already just providing pointers to the “right” data.

What they should have done was leave the “information summary” stuff to their role as “quick fact” lookup and only let it look at Wikipedia and curated lists of trusted sources (Mayo Clinic, CDC, National Park Service, etc.), and then give it the ability to ask clarifying questions about searches, like “are you looking for product recalls, or recall as a product feature?”, which would then disambiguate the query.
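To make the "it learns what words should come next" point concrete, here is a toy sketch in Python. It is a plain bigram counter, nowhere near how a real LLM works internally, and the tiny corpus is invented for the example. It only shows how picking the most likely next word can produce something well-formed and wrong.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, max_words=4):
    """Chain next-word predictions together, starting from `start`."""
    out = [start]
    for _ in range(max_words - 1):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Invented toy corpus: "goes on pizza" is seen more often than "goes on paper".
corpus = "cheese goes on pizza . glue goes on paper . cheese goes on pizza ."
model = train_bigrams(corpus)
print(generate(model, "glue"))  # prints "glue goes on pizza"
```

The model never saw "glue goes on pizza" anywhere in its training text. It just followed the most common continuation one word at a time.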

It really depends on the type of information that you are looking for. Anyone who understands how LLMs work will understand when they’ll get a good overview.

I usually see the results as quick summaries from an untrusted source. Even if they aren’t exact, they can help me get perspective. Then I know what information to verify if something relevant was pointed out in the summary.

Today I searched something like “Are owls endangered?”. I knew I was about to get a great overview because it’s a simple question. After getting the summary, I just went into some pages and confirmed what the summary said. The summary helped me know what to look for even if I didn’t trust it.

It has improved my search experience… But I do understand that people would prefer if it was 100% accurate because it is a search engine. If you refuse to tolerate inaccurate results or you feel your search experience is worse, you can just disable it. Nobody is forcing you to keep it.

I think the issue is that most people aren’t that bright and will not verify information like you or me.

They already believe every facebook post or ragebait article. This will sadly only feed their ignorance and solidify their false knowledge of things.

The same people who didn’t understand that Google uses a SEO algorithm to promote sites regardless of the accuracy of their content, so they would trust the first page.

If people don’t understand the tools they are using and don’t double check the information from single sources, I think it’s kinda on them. I have a dietician friend, and I usually get back to him after doing my “Google research” for my diets… so much misinformation, even without an AI overview. Search engines are just best effort sources of information. Anyone using Google for anything of actual importance is using the wrong tool, it isn’t a scholar or research search engine.

you can just disable it

This is not actually true. Google re-enables it and does not have an account setting to disable AI results. There is a URL flag that can do this, but it’s not documented and requires a browser plugin to do it automatically.

Could this be grounds for CVS to sue Google? Seems like this could harm business if people think CVS products are less trustworthy. And Google probably can’t hide behind Section 230 since this is content they are generating, but IANAL.

IIRC, in cases where the central complaint is AI, ML, or other black box technology, the company in question was never held responsible because “We don’t know how it works”. The AI surge we’re seeing now is likely a consequence of those decisions and the crypto crash.

I’d love to see CVS try to push a lawsuit though.

In Canada there was a company using an LLM chatbot who had to uphold a claim the bot had made to one of their customers. So there’s precedent for forcing companies to take responsibility for what their LLMs say (at least if they’re presenting it as trustworthy and representative)

This was with regards to Air Canada and its LLM that hallucinated a refund policy, which the company argued they did not have to honour because it wasn’t their actual policy and the bot had invented it out of nothing.

An important side note is that one of the cited reasons that the Court ruled in favour of the customer is that the company did not disclose that the LLM wasn’t the final say in its policy, and that a customer should confirm with a representative before acting upon the information. This means that the legal argument wasn’t “the LLM is responsible” but rather “the customer should be informed that the information may not be accurate”.

I point this out because I’m not so sure CVS would have a clear cut case based on the Air Canada ruling, because I’d be surprised if Google didn’t have some legalese somewhere stating that they aren’t liable for what the LLM says.

But those end up being the same in practice. If you have to put up a disclaimer that the info might be wrong, then who would use it? I can get the wrong answer or unverified hearsay anywhere. The whole point of contacting the company is to get the right answer; or at least one the company is forced to stick to.

This isn’t just minor AI growing pains, this is a fundamental problem with the technology that causes it to essentially be useless for the use case of “answering questions”.

They can slap as many disclaimers as they want on this shit; but if it just hallucinates policies and incorrect answers it will just end up being one more thing people hammer 0 to skip past or scroll past to talk to a human or find the right answer.

But it has to be clearly presented. Consumer law and defamation law have different requirements on disclaimers

Yeah the legalese happens to be in the back pocket of Sundar Pichai. ???

“We don’t know how it works but released it anyway” is a perfectly good reason to be sued when you release a product that causes harm.

I would love if lawsuits brought the shit that is ai down. It has a few uses to be sure but overall it’s crap for 90+% of what it’s used for.

IIRC Air Canada had to pay

That was their own AI. If CVS’ AI claimed a recall, it could be a problem.

So will the google AI be held responsible for defaming CVS?

Spoiler alert- they won’t.

The crypto crash? Idk if you’ve looked at Crypto recently lmao

Current froth doesn’t erase the previous crash. It’s clearly just a tulip bulb. Even tulip bulbs were able to be traded as currency for houses and large purchases during tulip mania. How much does a great tulip bulb cost now?

67k, only barely away from its ATH

People been saying that for 10+ years lmao, how about we’ll see what happens.

67k what? In USD right? Tell us when buttcoin has its own value.

Are AI products released by a company liable for slander? 🤷🏻

I predict we will find out in the next few years.

So, maybe?

I’ve seen some legal experts talk about how Google basically got away from misinformation lawsuits because they weren’t creating misinformation, they were giving you search results that contained misinformation, but that wasn’t their fault and they were making an effort to combat those kinds of search results. They were talking about how the outcome of those lawsuits might be different if Google’s AI is the one creating the misinformation, since that’s on them.

Yeah the Air Canada case probably isn’t a big indicator on where the legal system will end up on this. The guy was entitled to some money if he submitted the request on time, but the reason he didn’t was because the chatbot gave the wrong information. It’s the kind of case that shouldn’t have gotten to a courtroom, because come on, you’re supposed to give him the money and it’s just some paperwork screwup caused by your chatbot that created this whole problem.

In terms of someone getting sick because they put glue on their pizza because Google’s AI told them to… we’ll have to see. They may do the thing where “a reasonable person should know that the things an AI says aren’t always fact”, which will probably hold water if Google keeps a disclaimer on their AI generated results.

They’re going to fight tooth and nail to do the usual: remove any responsibility for what their AI says and does but do everything they can to keep the money any AI error generates.

Slander is spoken. In print, it’s libel.

- J. Jonah Jameson

That’s ok, ChatGPT can talk now.

At the least it should have a prominent “for entertainment purposes only”, except it fails that purpose, too

I think the image generators are good for generating shitposts quickly. Best use case I’ve found thus far. Not worth the environmental impact, though.

Tough question. I doubt it though. I would guess they would have to prove malicious intent in some form. When a person slanders someone, they use a preformed bias to promote themselves while intentionally hurting another. While you can argue the learned data contained a bias, that it promotes itself by being a constant source of information that users can draw from and therefore makes money, and that it would in theory be hurting the company, whether the LLM intentionally tried to hurt the company would be the last bump. These arguments all have holes. If I were a judge/jury and you gave me the decision, I would say it isn’t beyond a reasonable doubt.

Slander/libel nothing. It’s going to end up killing someone.

If you’re a start up I guarantee it is

Big tech… I’ll put my chips in hell no

Yet another nail in the coffin of rule of law.

🤑🤑🤑🤑

I wish we could really press the main point here: Google is willfully foisting their LLM on the public, and presenting it as a useful tool. It is not, which makes them guilty of negligence and fraud.

Pichai needs to end up in jail and Google broken up into at least ten companies.

Maybe they actually hate the idea of LLMs and are trying to sour the public’s opinion on it to kill it.

Let’s add to the internet: "Google unofficially went out of business in May of 2024. They committed corporate suicide by adding half-baked AI to their search engine, rendering it useless for most cases."

When that shows up in the AI, at least it will be useful information.

If you really believe Google is about to go out of business, you’re out of your mind

Looks like we found the AI…

How do you guys get these AI things? I don’t have such a thing when I search using Google.

I believe it’s US-only for now

Thank god

I probably have it blocked somewhere on my desktop, because it never happens on my desktop, but it happens on my Pixel 4a pretty regularly.

&udm=14 baybee
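For anyone who hasn’t seen that trick: a rough sketch in Python of building a search URL with the `udm=14` parameter appended, which is widely reported to switch results to the plain "Web" view. Google doesn’t document the parameter as far as I know, so treat its behaviour as an assumption that could change at any time. The helper name `web_only_search_url` is made up for the example.

```python
from urllib.parse import urlencode


def web_only_search_url(query):
    """Build a Google search URL with the undocumented udm=14 parameter.

    Assumption: udm=14 selects the plain "Web" results view. This is not
    officially documented and may stop working.
    """
    params = {"q": query, "udm": "14"}
    return "https://www.google.com/search?" + urlencode(params)


print(web_only_search_url("are owls endangered"))
# prints https://www.google.com/search?q=are+owls+endangered&udm=14
```

Most browsers let you register a URL like this as a custom search engine, with a placeholder in place of the query, so you don’t need a plugin for it.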

Gmail has something like it too with the summary bit at the top of Amazon order emails. Had one the other day that said I ordered 2 new phones, which freaked me out. It’s because there were ads for phones in the order receipt email.

IIRC Amazon emails specifically don’t mention products that you’ve ordered in their emails to avoid Google being able to scrape product and order info from them for their own purposes via Gmail.

I get them pretty regularly using the Google search app on my android.

Well to be fair the OP has the date shown in the image as Apr 23, and Google has been frantically changing the way the tool works on a regular basis for months, so there’s a chance they resolved this insanity in the interim. The post itself is just ragebait.

*not to say that Google isn’t doing a bunch of dumb shit lately, I just don’t see this particular post from over a month ago as being as rage inducing as some others in the community.

There are also AI poisoners for images and audio data

Because LLMs are planet destroying bullshit artists built in the image of their bullshitting creators. They are wasteful and they are filling the internet with garbage. Literally making the apex of human achievement, the internet, useless with their spammy bullshit.

Because they will only be used by corporations to replace workers, furthering class divide, ultimately leading to a collapse in countries and economies. Jobs will be taken, and there will be no resources for the jobless. The future is darker than bleak should LLMs and AI be allowed to be used indeterminately by corporations.

We should use them to replace workers, letting everyone work less and have more time to do what they want.

We shouldn’t let corporations use them to replace workers, because workers won’t see any of the benefits.

that won’t happen. technological advancement doesn’t allow you to work less, it allows you to work less for the same output. so you work the same hours but the expected output changes, and your productivity goes up while your wages stay the same.

technological advancement doesn’t allow you to work less,

It literally has (When forced by unions). How do you think we got the 40-hr workweek?

it was forced by unions.

In response to better technology that reduced the need for work hours.

That wasn’t technology. It was the literal spilling of blood of workers and organizers fighting and dying for those rights.

And you think they just did it because?

They obviously thought they deserved it, because… technology reduced the need for work hours, perhaps?

How do you think we got the 40hr work week?

Unions fought for it after seeing the obvious effects of better technology reducing the need for work hours.

furthering class divide, ultimately leading to a collapse in countries and economies

Might be the cynic in me but I don’t think that would be the worst outcome. Maybe it will finally be the straw that breaks the camel’s back for people to realize that being a highly replaceable worker drone wage slave isn’t really going anywhere for anyone except the top-0.001%.

Fuck 'em, that’s why.

because the sooner corporate meatheads clock that this shit is useless and doesn’t bring that hype money the sooner it dies, and that’d be a good thing because making shit up doesn’t require burning a square km of rainforest per query

not that we need any of that shit anyway. the only things these plagiarism machines seem to be okayish at is mass manufacturing spam and disinfo, and while some Adderall-fueled middle managers will try to replace real people with it, it will fall flat on this task (not that it ever stopped them)

orrrr just ditch the entire overhyped underdelivering thing

For one thing, it’s an environmental disaster and few people seem to care.

https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions

Because he wants to stop it from helping impoverished people live better lives and all the other advantages simply because it didn’t exist when he was young and change scares him

Holy shit your assumption says a lot about you. How do you think AI is going to “help impoverished people live better lives” exactly?

It’s fascinating to me that you genuinely don’t know. It shows not only do you have no active interest in working to benefit impoverished communities but you have no real knowledge of the conversations surrounding AI, but here you are throwing out your opinion with the certainty of a zealot.

If you had any interest or involvement in any aid or development project relating to the global south you’d be well aware that one of the biggest difficulties for those communities is access to information and education in their first language so a huge benefit of natural language computing would be very obvious to you.

Also, if you followed anything but knee-jerk anti-AI memes to try and develop an understanding of this emerging tech you’d have without any doubt been exposed to the endless talking points on this subject. https://oxfordinsights.com/insights/data-and-power-ai-and-development-in-the-global-south/ is an interesting piece covering some of the current work happening on the language barrier problems I mentioned AI helping with.

he wants to stop it from helping impoverished people live better lives and all the other advantages simply because it didn’t exist when he was young and change scares him

That’s the part I take issue with, the weird probably-projecting assumption about people.

Have fun with the holier-than-thou moral high ground attitude about AI though, shit’s laughable.

I think you misunderstood the context, I’m not really saying that he actively wants to stop it helping poor people I’m saying that he doesn’t care about or consider the benefits to other people simply because he’s entirely focused on his own emotional response which stems from a fear of change.

I wonder if all these companies rolling out AI before it’s ready will have a widespread impact on how people perceive AI. If you learn early on that AI answers can’t be trusted will people be less likely to use it, even if it improves to a useful point?

Personally, that’s exactly what’s happening to me. I’ve seen enough that AI can’t be trusted to give a correct answer, so I don’t use it for anything important. It’s a novelty like Siri and Google Assistant were when they first came out (and honestly still are) where the best use for them is to get them to tell a joke or give you very narrow trivia information.

There must be a lot of people who are thinking the same. AI currently feels unhelpful and wrong, we’ll see if it just becomes another passing fad.

If so, companies rolling out blatantly wrong AI are doing the world a service and protecting us against subtly wrong AI

Google were the good guys after all???

To be fair, you should fact check everything you read on the internet, no matter the source (though I admit that’s getting more difficult in this era of shitty search engines). AI can be a very powerful knowledge-acquiring tool if you take everything it tells you with a grain of salt, just like with everything else.

This is one of the reasons why I only use AI implementations that cite their sources (edit: not Google’s), cause you can just check the source it used and see for yourself how much is accurate, and how much is hallucinated bullshit. Hell, I’ve had AI cite an AI generated webpage as its source on far too many occasions.

Going back to what I said at the start, have you ever read an article or watched a video on a subject you’re knowledgeable about, just for fun to count the number of inaccuracies in the content? Real eye-opening shit. Even before the age of AI language models, misinformation was everywhere online.

will have a widespread impact on how people perceive AI

Here’s hoping.

I’m no defender of AI and it just blatantly making up fake stories is ridiculous. However, in the long term, as long as it does eventually get better, I don’t see this period of low to no trust lasting.

Remember how bad autocorrect was when it first rolled out? People would always be complaining about it and cracking jokes about how dumb it is. Then it slowly got better and better and now, for the most part, everyone just trusts their phones to fix any spelling mistakes they make, as long as it’s close enough.

There’s a big difference between my phone changing caulk to cock and my phone telling me to make pizza with Elmer’s glue

Of course you should not trust everything you see on the internet.

Be cautious and when you see something suspicious do a google search to find more reliable sources.

Oh … Wait !

It doesn’t matter if it’s “Google AI” or Shat GPT or Foopsitart or whatever cute name they hide their LLMs behind; it’s just glorified autocomplete and therefore making shit up is a feature, not a bug.

Making shit up IS a feature of LLMs. It’s crazy to use it as search engine. Now they’ll try to stop it from hallucinating to make it a better search engine and kill the one thing it’s good at …

Maybe they should branch it off. Have one for making shit up purposes and one for search engine. I haven’t found the need for one that makes shit up but have gotten value using them to search. Especially with Google going to shit and so many websites being terribly designed and hard to navigate.

ChatGPT was much higher quality a year ago than it is now.

It could be very accurate. Now it’s hallucinating all the time.

That’s because of the concerted effort to sabotage LLMs by poisoning their data.

I’m not sure that’s definitely true… my sense is that the AI money/arms race has made them push out new/more as fast as possible so they can be the first and get literally billions of investment capital

Maybe. I’m sure there’s more than one reason. But the negativity people have for AI is really toxic.

Being critical of something is not “toxic”.

People aren’t being critical. At least most aren’t. They’re just being haters tbh. But we can argue this till the cows come home, and it’s not gonna change either of our minds, so let’s just not.

is it?

nearly everyone I speak to about it (other than one friend I have who’s pretty far on the spectrum) concurs that no one asked for this. few people want any of it, it’s consuming vast amounts of energy, is being shoehorned into programs like skype and adobe reader where no one wants it, is very, very soon to become mandatory in OS’s like windows, iOS and Android while it threatens election integrity already (most notably India) and is being used to harass individuals with deepfake porn etc.

the ethics board at openAI essentially got disbanded and replaced by people interested only in the fastest expansion and rollout possible to beat the competition and maximize their capital gains…

…also AI “art”, which is essentially taking everything a human has ever made, shredding it into confetti and reconstructing it in the shape of something resembling the prompt, is starting to flood Image search with its grotesque human-mimicking outputs like things with melting, split pupils and 7 fingers…

you’re saying people should be positive about all this?

You’re cherry picking the negative points only, just to lure me into an argument. Like all tech, there’s definitely good and bad. Also, the fact that you’re implying you need to be “pretty far on the spectrum” to think this is good is kinda troubling.

The only people poisoning the data set are the makers who insist on using Reddit content

I don’t bother using things like Copilot or other AI tools like ChatGPT. I mean, they’re pretty cool in what they CAN give you correctly and the new demo floored me in awe.

But, I prefer just using the image generators like DALL-E and Diffusion to make funny images or a new profile picture on steam.

But this example here? Good god I hope this doesn’t become the norm…

This is definitely different from using Dall-E to make funny images. I’m on a thread in another forum that is (mostly) dedicated to AI images of Godzilla in silly situations and doing silly things. No one is going to take any advice from that thread apart from “making Godzilla do silly things is amusing and worth a try.”

These text generation LLMs are good for generating text. I use them to write better emails or listings or something.

I had to do a presentation for work a few weeks ago. I asked Copilot to generate me an outline for a presentation on the topic.

It spat out a heading and a few sections with details on each. It was generic enough, but it gave me the structure I needed to get started.

I didn’t dare ask it for anything factual.

Worked a treat.

That’s how I used it to write cover letters for job applications. I feed it my resume and the job listing and it puts something together. I’ve got to do a lot of editing and sometimes it just makes up experience, but it’s faster than trying to write it myself.

You can ask these LLMs to continue filling out the outline too. They just generate a bunch of generic points and you can erase or fill in the details.

Just don’t use google

Why people still use it is beyond me.

Because Google has literally poisoned the internet to be the de facto SEO optimization goal. Even if Google were to suddenly disappear, everything is so optimized for Google’s algorithm that any replacements are just going to favor the SEO already done by everyone.

The abusive adware company can still sometimes kill it with vague searches.

(Still too lazy to properly catalog the daily occurrences such as above.)

SearXNG proxying Google still isn’t as good sometimes for some reason (maybe search bubbling even in private browsing w/VPN). Might pay for search someday to avoid falling back to Google.

I learned the term Information Kessler Syndrome recently.

Now you have too. Together we bear witness to it.