I’ve seen a trend where people move the goalposts on the reasons they’re not able to switch. “If only this program worked I could switch”, but once that program is ported there’s a new excuse. Sooner or later you’ll have to draw a line and say “99% of my stuff works, the 1% that doesn’t can get bent”.

Yote.zip

Every community I care about is dead


As a Debian user I agree with Louis Rossmann’s sage advice.

Edit: (make sure you enable unattended security upgrades at least so you can pretend that you only update once every few months)

694·1 year ago

I’ll bet if you actually use it 24/7 they will throttle/disconnect you. “Oh I’m sorry you used up your 1TB limit. No one needs more than that per month! Are you doing something illegal???”

0·1 year ago

“Project Silica’s goal is to write data in a piece of glass and store it on a shelf until it is needed. Once written, the data inside the glass is impossible to change.”

Very important note here.

0·1 year ago

Laser printers are also great if you just need black.

3·1 year ago

Grats on the Arch Linux install, S-tier distro for what it attempts to accomplish.

It feels like there needs to be a category in between conservative and paranoid. I’m probably 90% of the way over to tech paranoid but using Tor Browser and Tails is a little much.

1·1 year ago

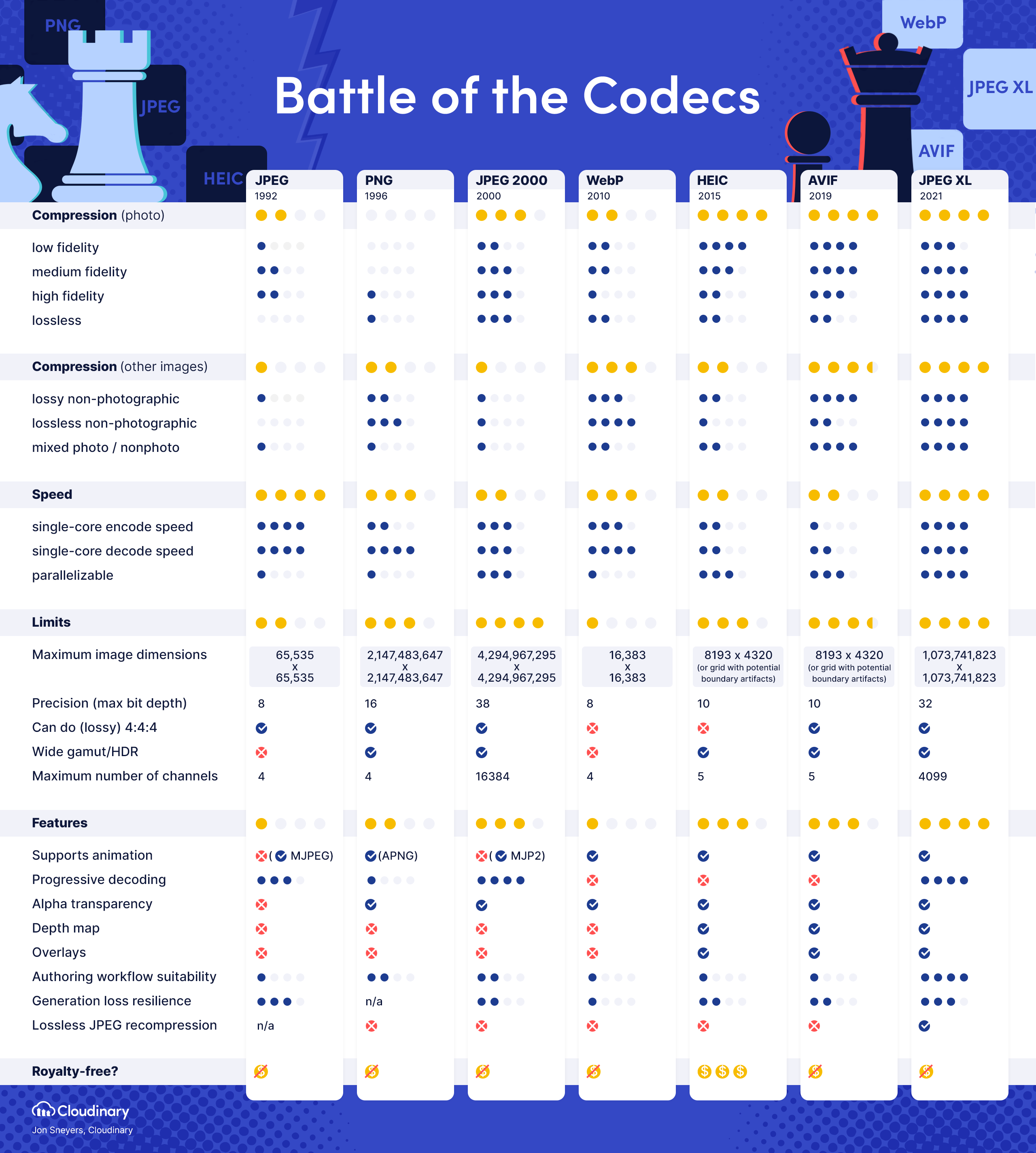

With effort level 7 you should be getting images roughly two-thirds the size of the original PNG on average (assuming the PNG is already properly optimized). I would try again with at least effort levels 3, 4, 5, and 7. Also consider that properly compressing a PNG with a tool like oxipng takes very expensive CPU time too.

What sort of balance are you looking for with regards to filesize and encode time? At the very least, effort levels 1 through 3 will probably still give you better results than PNG while being ridiculously quick, so there shouldn’t be any configuration where PNG is a better choice than JXL with regards to speed.
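One way to answer the filesize/encode-time question for your own images is to sweep the effort levels and time each encode. This is only a rough sketch: it assumes the `cjxl` reference encoder from libjxl is on your PATH, that `-e` sets the effort and `-d 0` requests lossless output, and the helper names are placeholders of mine rather than anything official.

```python
import shutil
import subprocess
import time
from pathlib import Path


def cjxl_command(src, dst, effort, distance=0.0):
    """Build a cjxl invocation; distance 0 asks for lossless output."""
    return ["cjxl", str(src), str(dst), "-e", str(effort), "-d", str(distance)]


def sweep_efforts(src, efforts=(1, 3, 4, 5, 7)):
    """Encode src once per effort level; return (effort, seconds, bytes) rows."""
    if shutil.which("cjxl") is None:
        raise RuntimeError("cjxl not found on PATH")
    rows = []
    for effort in efforts:
        # e.g. photo.png -> photo.e3.jxl, so the outputs don't overwrite each other
        dst = Path(src).with_suffix(f".e{effort}.jxl")
        start = time.perf_counter()
        subprocess.run(cjxl_command(src, dst, effort), check=True, capture_output=True)
        rows.append((effort, time.perf_counter() - start, dst.stat().st_size))
    return rows
```

Calling `sweep_efforts("your_image.png")` and comparing the rows against the size of an oxipng-optimized PNG should show where the speed/size tradeoff lands for your content.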

1·1 year ago

JXL has been ready for practical use for a while now - the only place where JXL support is still missing is browsers (due to Google’s politically-motivated removal from Chromium). I’m not sure if anyone has tried using JXL with ML, but it’s certainly ready to be tested right now. IMO JXL has been ready since the libjxl 0.7.0 release in September 2022. They’re still working towards a 1.0 but every image-related application has built-in support for JXL already and it can more or less be considered ready.

> haven’t seen any major downsides, besides less optimal performance for very low resolution images

Just to note here, to be precise AVIF starts (barely) winning at low fidelity ranges, not low resolution. Meaning if you want a blurry mess that looks like this, AVIF will compress slightly better (that’s an actual AVIF converted to PNG by the way).

At the risk of sounding like sour grapes, this compression advantage doesn’t truly matter. This level of compression is almost never used, and even if it was, even drastic relative filesize savings would ultimately amount to bytes/kilobytes in the grand scheme of all images you’re serving. It’s more impactful to compress large images simply because they are larger. Smaller images are already small and efficiency deltas in a 1kB vs 1.1kB image are meaningless compared to a 600kB vs 800kB image.
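To put numbers on that, here is the arithmetic for the two pairs of sizes above, as a quick sketch (the function name is just mine):

```python
def bytes_saved(original_kb, compressed_kb):
    """Return (absolute saving in kB, relative saving as a fraction of the original)."""
    saved = original_kb - compressed_kb
    return saved, saved / original_kb


small = bytes_saved(1.1, 1.0)   # tiny image: 1.1 kB vs 1 kB
large = bytes_saved(800, 600)   # large image: 800 kB vs 600 kB

for label, (saved, fraction) in (("small", small), ("large", large)):
    print(f"{label}: saves {saved:.1f} kB ({fraction:.0%} of the original)")
```

The large image saves about two thousand times as many bytes as the small one, which is why the efficiency delta on tiny images barely registers in total bandwidth.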

> I also wonder if the support for progressive loading might be useful for more efficient, low resolution variants of high resolution models. Just store one set of high res images and load them in progressive steps to make smaller data sets. Like, say you have a bunch of 8k images, but you only want to make a website banner based on the model from those 8k res images. I wonder if it’s possible to use the progressive loading support to halt reading in the images at 1k

I’m not fully confident on this aspect but I’m pretty sure that JXL supports more than just traditional progressive decoding - you can actually pull “complete” images out of the bitstream from arbitrary ranges. Meaning you could efficiently store a full range of quality options in just one image, then serve them on the fly.

> Any time I see a big feature jump, like better file size, I assume the trade off in another feature negates at least half the benefit. It’s pretty rare, from what I’ve seen, to have improvements on all fronts.

JXL is self-described “alien technology from the future”, and it was made by a “dream team” of image engineers who have had a hand in just about every image codec and compression technique from our past. It also benefits from being a real image codec, whereas every recent image format that has gained widespread adoption has been derived from a video codec (WebP, AVIF, HEIC).

The only truly useful thing it doesn’t perform best-in-class at is animation encoding (losing to AVIF because it’s based on the amazing AV1 video codec), and I would honestly recommend just serving AV1 videos instead, and skipping image formats entirely.

A neutral aspect of JXL is that it does worse in single-core decode speed compared to JPEG (which is disgustingly fast), but JXL can be parallelized whereas JPEG cannot. This is ultimately an advantage for JXL in the general use case, where users have at least 4 cores available, but for large-scale distributed processing I imagine JPEG’s single-core speed may still give it an edge?

If you’re curious about the technical aspects of JXL, I recommend reading their official slidedeck. The nitty-gritty details start at page 59, but the whole thing is a good read.

1·1 year ago

No one uses hardware decoding for images - it’s just not a good fit for the reality of how we use images. Images are small and easy to decode, whereas starting up a hardware decoder takes a non-trivial amount of time. Additionally, GPU decoders only work single-threaded, so each image would have to be decoded one by one, instead of all at once like with CPU decoding. This was already attempted with VP8/WebP and they gave up trying to make it any good. Videos are good candidates for hardware decoding since they’re large and you’re only looking at one at a time.

If you have benchmarks or some proof showing otherwise, by all means post them here.

0·1 year ago

You don’t need to use the high compression profiles to get good performance though. If you have a use case where you are resource limited you should stick to effort levels 5-7 for very little loss in quality, or even 3-4 for lightning quick speed (the default is effort 7). Reference this benchmark against AVIF for effort values vs. speed (SSIMULACRA 2 is a deterministic psychovisual metric - higher is better).

Also, an important consideration in this realm is that JXL makes really clever use of variable-DCT (how big a chunk is) and adaptive quantization (what quality should be used for that chunk), allowing “quality levels” that you specify to be much more visually consistent across every image, instead of other codecs that make some images look bad at quality level 90 and some images look good at level 70. This allows you to select a consistent quality level and lower your encoding effort to compensate, instead of needing to always drive a high quality+effort level to account for every region in a picture looking good.

(If you want a slightly deeper dive into JXL’s performance, this is a concise post on various metrics)

1·1 year ago

> I think you forgot a pretty crucial point, that it is also royalty free.

I’ll go back and add it - there’s a lot of great stuff that I didn’t mention just for brevity. The biggest royalty concern is HEIC atm, which is basically a nonstarter. I’m not sure how the licensing on the other free formats compares against JXL.

> I wonder how the Chrome team managed to test it so poorly they claimed it wasn’t worth it? Just the versatility alone should make it a no-brainer.

Make no mistake, it was a political killing. They didn’t kill it because of perceived performance, they killed it ahead of their public benchmarks because of “lack of interest”. Their cited lack of interest was determined after only a few months of the format going live behind opt-in experimental flags, and once they made their original decision, just about every large tech company spoke up in favor of JXL against Google’s decision on their bugtracker, including Adobe, Intel, Nvidia, Facebook, Shopify, and Flickr. Google still plugged their ears and pretended no one was interested.

Google is trying to push WebP (2.0?) and AVIF, and using their browser marketshare to kill JXL and make that happen. Why they went through all this trouble to kill a format that they themselves co-developed, I really have no idea. I follow JXL relatively closely and I still am not 100% sure why they went through with this. All I know is that the decision was politically-motivated, and without applying political/ecosystem pressure they’re not going to change their minds with data.

Edit: by the way, the last few comments still trickling in on that bugtracker are a great read, especially #406. #406 reads so similarly to my comment I’m surprised I didn’t write it, haha.

2·1 year ago

A shortlist:

- it has the best lossy image compression (not counting extremely low bitrate images, where AVIF starts to win)

- it can losslessly recompress JPEGs for a free 20% space savings - no image quality loss

- it supports parallel decoding for extra speed

- it supports progressive decoding (viewing a lower quality version of the image while it loads), unlike WebP/AVIF which just “pop up” when you’ve downloaded the whole thing

- it supports lossless

- it compresses lossless extremely well (notably unlike AVIF and PNG which fall on their face with lossless compression)

- it supports animation (though AVIF is generally a better format for animation, because it’s based on a proper video codec)

- it supports HDR

- it has a very strong resilience against generation loss (the classic “JPEG degradation” of resaving images)

- it is royalty-free

- it otherwise has roughly every image format feature we’ve ever thought of included in its spec
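If you want to check the lossless JPEG recompression claim on your own files, a round trip like the following should do it. This is a sketch under assumptions: it expects the `cjxl` and `djxl` tools from libjxl on your PATH, and it relies on my understanding that `cjxl` transcodes JPEG input losslessly by default and that `djxl` writing to a `.jpg` path reconstructs the original file. The helper names are made up for illustration.

```python
import hashlib
import subprocess
from pathlib import Path


def sha256(path):
    """Hex digest of a file's contents, used to compare files byte for byte."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def percent_saved(original_bytes, recompressed_bytes):
    """Space saved relative to the original size, in percent."""
    return 100.0 * (original_bytes - recompressed_bytes) / original_bytes


def recompress_and_verify(jpeg_path):
    """JPEG -> JXL -> JPEG; return (percent saved, whether the round trip is byte-identical)."""
    jpeg = Path(jpeg_path)
    jxl = jpeg.with_suffix(".jxl")
    restored = jpeg.with_name(jpeg.stem + ".restored.jpg")
    # Assumption: JPEG input is transcoded losslessly without extra flags.
    subprocess.run(["cjxl", str(jpeg), str(jxl)], check=True, capture_output=True)
    subprocess.run(["djxl", str(jxl), str(restored)], check=True, capture_output=True)
    identical = sha256(jpeg) == sha256(restored)
    return percent_saved(jpeg.stat().st_size, jxl.stat().st_size), identical
```

If the second value comes back `True`, the JXL file holds everything needed to get the exact original JPEG back, so the space saving costs nothing in quality.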

If JXL is not the next image format then we will never ever get rid of JPEG and PNG. There has never been a more obviously superior image format in history.

This might help: Image format comparison table

FS TAB - 3 syllables

F STAB - 2 syllables

The choice is clear.

I didn’t mean my post to be read as trying to convince someone to use Linux, but as someone trying to convince themselves to use Linux. It’s fairly common that people want to switch but have convinced themselves that unless they have their exact same workflow from Windows they won’t be able to.