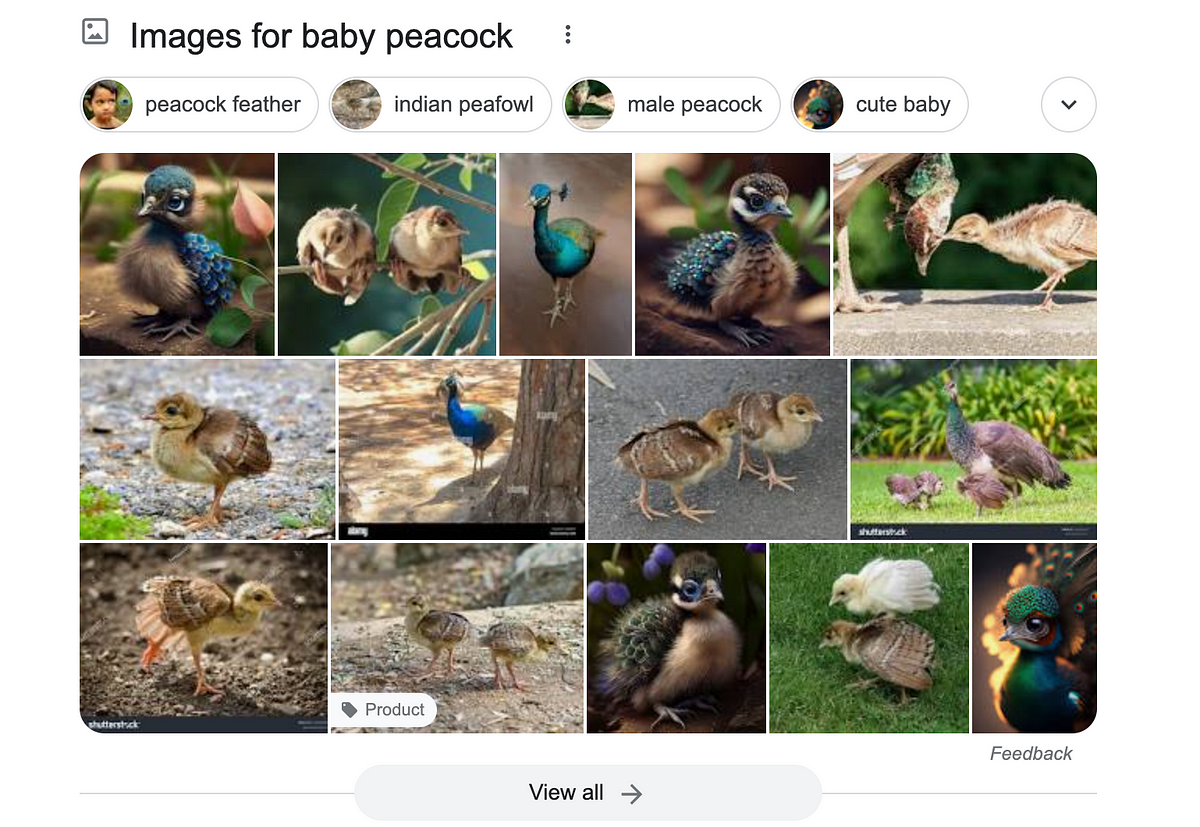

Old article, but still relevant. If you search on Google, you will see some of the same AI art.


Synthetic media should be required to be watermarked at the source

Bit late for that (even in 2023). Best we could do now is something like public key cryptography, with cameras having secret keys that images are signed with. However:

- That would require people to purchase new cameras (though phones could likely do this without a new device, leveraging the secure enclaves to sign)

- Depending on the implementation of the signing, even applying filters to images, color grading, or cropping an image could make it stop matching. If you remove something from the background or make other overt changes, it’s definitely not going to match.

- Adobe has a system for handling changes and attesting that no AI was used. Ideally, other major photo editing tools will do something similar. However, I don’t think it’s feasible to securely sign such an attestation history locally, so all such images would need to be uploaded to be signed remotely.

- This won’t work for traditional art
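To make the "stops matching" point concrete, here is a rough sketch of why the choice of what gets hashed matters. It is not any camera vendor's actual scheme: the 8x8 grid, the function names, and the "average hash" used as the perceptual hash are all assumptions picked for illustration.

```python
import hashlib

def exact_hash(pixels):
    """SHA-256 over the raw pixel bytes: changes if any single pixel changes."""
    data = bytes(v for row in pixels for v in row)
    return hashlib.sha256(data).hexdigest()

def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]

def hamming(a, b):
    """Number of bit positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))

# Hypothetical 8x8 grayscale gradient standing in for a downscaled photo.
original = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]

# Mild "filter": brighten every pixel by the same amount.
brightened = [[min(255, v + 20) for v in row] for row in original]

# Overt edit: black out the bottom-right quadrant, as if an object were removed.
edited = [[0 if r >= 4 and c >= 4 else v for c, v in enumerate(row)]
          for r, row in enumerate(original)]

print(exact_hash(original) == exact_hash(brightened))              # exact hash breaks on the filter
print(hamming(average_hash(original), average_hash(brightened)))   # perceptual hash unchanged
print(hamming(average_hash(original), average_hash(edited)))       # perceptual hash far off
```

A signature over the exact hash fails after any filter at all, while one over a perceptual hash can tolerate uniform tweaks but still flags the overt edit. Real perceptual hashes are more involved than this, and a verifier would have to pick a distance threshold.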

For artists and photographers with old school cameras (“old school” meaning “doesn’t compute and sign a perceptual hash of the image”), something similar could still be done. Each such person can generate a public / private key pair for themselves and manually sign the images they’ve created. This depends on you trusting that specific artist, though, as opposed to trusting the manufacturer of the camera used.
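For what the per-artist signing could look like mechanically, here is a toy sketch using textbook RSA with only the Python standard library. The key is built from two well-known Mersenne primes purely so the example runs; it is nowhere near secure, and the function names and sample bytes are made up. A real setup would use a vetted library and a modern scheme such as Ed25519.

```python
import hashlib

# Toy key pair. Assumption for illustration only: real keys are generated
# randomly and are much larger; these primes are public knowledge.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                              # public modulus
E = 65537                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (needs Python 3.8+)

def digest(image_bytes):
    """SHA-256 of the image, reduced to fit under the toy modulus."""
    return int.from_bytes(hashlib.sha256(image_bytes).digest(), "big") % N

def sign(image_bytes):
    """Artist side: sign the digest with the private exponent."""
    return pow(digest(image_bytes), D, N)

def verify(image_bytes, signature):
    """Viewer side: check the signature using only the public values N and E."""
    return pow(signature, E, N) == digest(image_bytes)

artwork = b"raw bytes of a scanned painting"   # hypothetical image data
signature = sign(artwork)

print(verify(artwork, signature))               # signature matches the original
print(verify(artwork + b"!", signature))        # any change to the bytes breaks it
```

The check only proves that whoever holds the private key signed those exact bytes. Whether the image is actually human-made still comes down to trusting the artist who published the public key.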